Continued Process Verification (CPV) Signal Responses in Biopharma

This paper was written by members of the BioPhorum Operations Group CPV and Informatics team and widely reviewed across the BPOG collaboration. As such, it represents the current consensus view of process verification subject matter experts in the biopharmaceutical industry, but does not represent the procedural details of any individual company. It is designed to be informative for industry members, regulators, and other stakeholders. It does not define statistical methods in detail, as these definitions are readily available elsewhere.

This paper describes how signals can be developed and evaluated in support of CPV in the biopharmaceutical industry. Implementation of CPV, in addition to meeting regulatory expectations, can also provide a basis for continuous improvement of production processes and hence greater consistency of product quality and assurance of supply.

CPV involves gathering data related to CQAs and CPPs, as well as analyses that reveal any statistical signals that become evident over time. It is designed to detect variation within specifications. Thus, CPV is about maintaining control within specification and so does not normally lead to a formal investigation. This paper provides several examples of signal response and escalation within the quality system where necessary as a model of a risk-based approach to CPV.

In 2011, the FDA introduced guidance on the process validation life cycle, including continued process verification (CPV).1 While implementation is becoming a regulatory expectation, CPV can provide benefits beyond compliance by identifying opportunities to improve production processes and ultimately, the reliability of drug quality and supply.

CPV is the third stage of the process validation life cycle. It is a continued assessment of the parameters and attributes within the control strategy identified in Stage 1 (process design) and refined at the end of Stage 2 (process qualification). Its principal objective is to detect variability in the process and associated quality attributes that may not have been evident when the process was characterized and introduced. CPV provides continued verification that the control strategy remains effective in maintaining product quality.

Additional parameters and/or attributes not considered critical to quality or otherwise not specified in the control strategy may also be included in the CPV program (or an associated process monitoring program) to enhance process learning and support investigations to identify the root cause and source of unexpected variability.

CPV can also identify opportunities to improve process performance and/or optimize process control. Using statistical methods, data from historical manufacturing or characterization studies are evaluated during CPV implementation to define signal criteria, set limits, and implement appropriate response procedures for ongoing operations. Signals are thus selected to identify process behaviors of interest and indicate when statistically meaningful variation may be affecting the process.

A critical aspect of CPV is establishing a procedure that provides a consistent response to these signals as they become evident. Ideally, perhaps, signals would be detected as soon as they occur, but this is not practical in most cases. Practically, the signal should be detected and a response mounted before the indicated trend leads to a true process deviation or out-of-specification (OOS) event. Good practice is to respond as soon as possible based on risk assessment.

The purpose of this document is to provide best practice guidance for responses to CPV signals that occur within the process’s acceptable control space.

There are five main steps in establishing CPV signals and associated response procedures.

- Define parameters and signal criteria

- Establish monitoring and evaluation frequency

- Establish signal evaluation criteria and actions

- Escalate actions if necessary

- Document signals and response

Although this paper is focused primarily on responses, some discussion of signal selection is required since the magnitude of the response should be commensurate with the severity of the signal.

Signal Selection

Define parameters and criteria

In general, CPV signals assess predicted performance based on previous process experience. The development and effectiveness of these signals depend on statistical techniques sensitive to the size and inherent variability of the existing data set. While not ideal, some signals will be due to existing and acceptable variation that was not fully captured and characterized in the initial data set. This is a common occurrence in biopharma, given the complexity of manufacturing processes and raw materials. Although these are sometimes referred to as “nuisance signals” or “false positives,” such signals may prove to be useful learning opportunities over time and can be used to augment the data set.

Definitions

Capability indicator - The ability of a process to deliver a product within specification limits. The concept of process capability can also be defined in statistical terms via the process performance index, Ppk, or the process capability index, Cpk.

Continued process verification (CPV) - A formal process that enables the detection of variation in the manufacturing process that might have an impact on the product. It provides opportunities to proactively control variation and assure that during routine production the process remains in a state of control.1

Control strategy - A planned set of controls, derived from current product and process understanding, that assures process performance and product quality. The controls can include parameters and attributes related to drug substance and drug product materials and components, facility and equipment operating conditions, in-process controls, finished product specifications, and the associated methods and frequency of monitoring and control.

CPV limit - Limit derived statistically, or justified scientifically, for use in process trending. Limit is meant to predict future process performance based on past performance experience and is not necessarily linked to process or patient requirements. In a capable process, CPV trend limits will be tighter than other limits, ranges, or specifications that are required by the molecule’s control strategy.

Critical process parameter (CPP) - A process parameter whose variability has an impact on a critical quality attribute and therefore should be monitored or controlled to ensure the process produces the desired quality.5

Critical quality attribute (CQA) - A physical, chemical, biological, or microbiological property or characteristic that should be within an appropriate limit, range, or distribution to ensure the desired product quality.

Escalation - To respond to a signal by following the deviation/nonconformance system to investigate for potential product or process impact.

Evaluation - An analysis of data and related circumstances around a statistical signal, with the intent of identifying the cause of the signal.

Noncritical parameter (NCP) - A noncritical parameter has no impact on quality at the process step in question. Note: It may have an impact on the performance of the next process step and so may be monitored for process control purposes.

Quality management system - The business processes and procedures used by a company to implement quality management. This includes, but is not limited to, investigations from process and laboratory deviations, commitments, and change control.

Signal - An indication of unexpected process variation, triggered by a violation of a predetermined statistical rule that is used to identify special cause variability within a process. Process behaviors with assigned signals include (but are not limited to):

- Outlier (such as Nelson rule 1)

- Shift (such as Nelson rule 2)

- Drift (such as Nelson rule 3)

Triage - An initial read on the signal by SMEs to determine if the signal should remain in the default response category or be escalated or de-escalated.

Identify variation

The focus of CPV signals should always be to identify variation within specified limits defined in the control strategy. This applies when critical quality attributes (CQAs) are maintained within specifications and critical process parameters (CPPs) are maintained within proven acceptable ranges. Signals that are outside the control strategy (i.e., OOS) are investigated primarily within the quality management system (QMS).

This paper is concerned primarily with evaluation of responses to CPV signals within the design space. There would be benefit, however, in capturing and integrating lessons learned from both formal investigations and CPV-related evaluations for improved long-term process control.

Establish monitoring and evaluation frequency

As stated previously, the ideal scenario for CPV monitoring is to identify signals in real time during manufacturing and react accordingly. This scenario is not always practical, however, since many signals require multiple data points (e.g., signals for drift or shift) and specific data-capture and analysis technologies are required to perform the calculation. Given that these signals are by definition within the specified limits defined in the control strategy, the risk inherent in decoupling the identification of, and reaction to, signals from batch release is low, allowing for a more periodic review.

The review frequency should be established with the monitoring plan and should consider:

- Relative risk of a parameter or attribute deviating from its acceptable range

- Manufacturing frequency

- Level of historical process knowledge

- Manufacturing plant’s technological capability to collect and analyze the data

Establish evaluation criteria and response

A CPV signal is designed to identify potential new variation or unexpected patterns in the data. Because these conditions are within the control strategy, signals should not automatically be considered formal good manufacturing practice (GMP) deviations. There may be cases, however, in which a signal is significant enough to indicate a product quality or validation effect that requires tracking and resolving within the QMS. When this happens, a cross-functional data review and escalation procedure should be in place to ensure the signal is addressed appropriately.

This paper provides an example of a procedure that can be used or adapted for responses to CPV signals using risk-based decision-making to determine when a signal should be escalated. The procedure has four key elements that should ideally be in place as prerequisites (Table A).

Table A: Prerequisite elements of a signal response procedure

| Element | Description |
|---|---|
| CPV plan with signal criteria identified | Plan outlines the parameters analyzed for CPV and rules for identifying signals. This provides guidance on what process behaviors merit further analysis. |
| Default responses to signals and parameters | Default responses are predetermined actions for each parameter/signal combination. These are based on the criticality of the parameter and the nature of the signal to ensure the response is commensurate with the level of risk being signaled. |
| Data signal review and escalation process | Signals and their default responses should be reviewed periodically by a cross-functional team of SMEs to evaluate the appropriateness of the default response and determine if alteration (escalation or de-escalation) is needed. |
| Documentation system | Signal response and rationale must be documented and approved. |

Table B: Signal-response action terms

| Action | Description |
|---|---|
| No action | No response required. This response is associated with signals that are not considered significant enough to warrant further root cause analysis and require no corrective or preventive action (CAPA). Document the decision and rationale per approved procedures. |
| Evaluation | This response is associated with signals of unexpected variation from historical processing experience that are considered significant enough to warrant a technical evaluation to understand the cause of variance; it is not significant enough, however, to warrant a product quality impact assessment. A subsequent CAPA may be required. Evaluations can span a wide spectrum of complexity, from a simple review of a batch record or starting raw materials to a complex, collaborative, cross-functional evaluation. The size of the evaluation is based on the technical input of process SMEs. |
| Escalation to QMS | This response is associated with signals of unexpected variation from historical processing experience that are considered significant enough to warrant a technical evaluation to assess potential product/validation impact and establish a root cause. A subsequent CAPA may be required. The signal response is tracked within the QMS and requires a product/validation impact analysis, root cause identification, and any associated CAPAs within the timelines mandated by the relevant quality procedures. |

CPV plan

At the completion of Stage 2, process performance qualification (PPQ),2 a CPV plan shall be established with the following components, including a rationale for each:

- Parameters and attributes to be monitored

- CPV limits for each parameter and attribute combination

- Frequency of trend evaluations

- Statistical signals to be evaluated

- Default responses for each parameter-signal combination

The rationale can be risk based and should include an explanation of which process behaviors may merit further analysis. Ideally, each default response should be determined from a risk-based strategy that considers the criticality of the parameters, the nature of the signals, and the performance/capability of the process parameters.

The CPV plan should reference company-specific procedures that specify reporting formats, designate escalation procedures, identify roles and responsibilities for CPV trending, and define terms.3 For illustration, this paper uses the signal-response action terms shown in Table B.

Default responses to signals

Table C provides an example of a risk-based strategy that could be used to determine minimum default responses for each parameter and signal in the CPV plan. The example uses classic signals, which indicate departures from established behavior for normally distributed, independent data. Actual strategies may vary by CPV plan. The default response assigned for an individual parameter-signal combination may vary from the proposed default if a proper justification is provided in the plan.

Table D illustrates how default responses and modifications can be presented in a CPV plan.
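The risk-based lookup described above can be sketched in code. The following is a minimal illustration, not a prescribed implementation: the names `Response`, `default_response`, and `plan_response` are hypothetical, the capability labels "acceptable" and "marginal" stand in for thresholds that would be defined in a company procedure, and the choice of "no action" for CPP shifts and drifts when capability is acceptable is an assumption, since the example strategy only states the response for marginal capability.

```python
from enum import IntEnum


class Response(IntEnum):
    """Signal-response action terms, ordered by increasing level of response."""
    NO_ACTION = 0
    EVALUATION = 1
    ESCALATION = 2


def default_response(parameter_class, signal, capability):
    """Minimum default response for a parameter class and signal type.

    parameter_class: "CQA", "CPP", or "NCP"
    signal: "outlier", "shift", or "drift"
    capability: "acceptable" or "marginal" (hypothetical labels)
    """
    if signal not in ("outlier", "shift", "drift"):
        raise ValueError(f"unknown signal: {signal}")
    if capability not in ("acceptable", "marginal"):
        raise ValueError(f"unknown capability: {capability}")
    if parameter_class == "NCP":
        return Response.NO_ACTION
    if parameter_class == "CQA":
        if capability == "marginal":
            return Response.ESCALATION
        return Response.EVALUATION
    if parameter_class == "CPP":
        if signal == "outlier":
            return Response.EVALUATION
        # Assumption: shifts and drifts need no action when capability is acceptable.
        if capability == "marginal":
            return Response.EVALUATION
        return Response.NO_ACTION
    raise ValueError(f"unknown parameter class: {parameter_class}")


def plan_response(name, parameter_class, signal, capability, overrides=None):
    """Apply a justified plan-specific override if one exists, else the default."""
    overrides = overrides or {}
    if (name, signal) in overrides:
        return overrides[(name, signal)]
    return default_response(parameter_class, signal, capability)
```

For instance, a plan that justifies "no action" for outliers of a highly capable CPP could pass `overrides={("CPP1", "outlier"): Response.NO_ACTION}`; every other combination falls back to the default strategy.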

Escalation process

During CPV plan execution, data is collected and analyzed at a predefined frequency. Once a signal is identified, a cross-functional team (CFT) of subject matter experts (SMEs) with knowledge of the process, manufacturing operations, quality control, and/or quality assurance reviews the signal to determine the appropriate response. Others, such as quality control laboratories, regulatory sciences, or continuous improvement and process development may also participate.

The CFT reviews the signal against the default response and determines if escalation to a higher level or de-escalation to a lower level of response is appropriate. Factors that may be considered when altering the default response include (but are not limited to) those shown in Table E, together with the possible outcomes of the CFT review.

When a product is manufactured at more than one site, it is advisable to have a system to share CPV data and/or observations. In all cases, alteration of any default response to signals requires justification and proper documentation.

Document signals and responses

Once the CFT triage is complete and outcome aligned, the team will perform the recommended actions or ensure they are done. If the action is to escalate, the appropriate quality system document will be initiated and procedures that govern it will be followed. If the action is evaluation, additional analysis or experimentation will be required to determine the cause of the signal.

Results of any evaluations should be documented following GMP principles. If no action is taken, that decision and rationale must also be documented, but no further action is required.

Signal and response documentation typically falls within one of the types described in Table F.

Any changes to control limits, signals, or processes that result from evaluation should be managed by the system most appropriate for the change (e.g., change control or CAPA). Quality approval is required to close out a response to a signal for all three categories (escalation, evaluation, no action).

Table C: Example risk-based strategy for minimum default responses

| Signal | Signal type | CQA* | CPP | NCP |
|---|---|---|---|---|
| Outlier | Nelson Rule 1 or Western Electric Rule 1: 1 point outside of a control limit | Evaluation if process capability is acceptable;† escalation if process capability is marginal | Evaluation | No action |
| Shift | Nelson Rule 2: 9 consecutive points on same side of center line. Western Electric Rule 4: 8 consecutive points on same side of center line | Evaluation if process capability is acceptable; escalation if process capability is marginal | Evaluation if process capability is marginal | No action |
| Drift | Nelson Rule 3 or Western Electric Rule 5: 6 consecutive points, all increasing or all decreasing | Evaluation if process capability is acceptable; escalation if process capability is marginal | Evaluation if process capability is marginal | No action |

* Where existing procedures require formal quality investigations, those procedures supersede this strategy (e.g., OOT/OOS). Where possible, CPV plans should be aligned with OOT procedures.

† Acceptable and marginal process capability can be defined in a procedure, in statistical terms.3 ,4
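The three signal types in Table C can be detected with a few lines of code. The sketch below is illustrative only: the function names are hypothetical, the center line and sigma are assumed to come from the CPV plan, and the outlier rule assumes control limits at three standard deviations from the center line. The `run` arguments default to the Nelson rule lengths; passing `run=8` to the shift function gives the Western Electric variant.

```python
def rule1_outliers(values, center, sigma):
    """Indices of points beyond the control limits (assumed at center +/- 3 sigma)."""
    return [i for i, x in enumerate(values) if abs(x - center) > 3 * sigma]


def rule2_shift(values, center, run=9):
    """Indices at which `run` or more consecutive points lie on one side of center."""
    hits, count, side = [], 0, 0
    for i, x in enumerate(values):
        s = (x > center) - (x < center)  # +1 above, -1 below, 0 on the line
        if s != 0 and s == side:
            count += 1
        else:
            side = s
            count = 1 if s != 0 else 0
        if count >= run:
            hits.append(i)
    return hits


def rule3_drift(values, run=6):
    """Indices at which `run` or more consecutive points all rise or all fall."""
    hits, count, direction = [], 1, 0
    for i in range(1, len(values)):
        d = (values[i] > values[i - 1]) - (values[i] < values[i - 1])
        if d != 0 and d == direction:
            count += 1
        else:
            direction = d
            count = 2 if d != 0 else 1  # a new run starts with two points
        if count >= run:
            hits.append(i)
    return hits
```

Each function returns the batch indices at which the rule is satisfied, so a long run is reported at every point after it first qualifies. A plan may prefer to report only the first index of each run.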

Table D: Example presentation of default responses and modifications in a CPV plan

| Parameter | Outlier | Mean shift | Drift | Comment |
|---|---|---|---|---|
| NCP1 | No action | No action | No action | N/A |
| NCP2 | No action | Evaluation | Evaluation | No impact to quality. Evaluate shifts and drifts to limit business impact. |
| CPP1 | No action | Evaluation | Evaluation | No action for outliers due to high process capability. |
| CPP2 | Evaluation | Evaluation | Evaluation | Marginal process capability. |
| CQA1 | Escalation | Escalation | Escalation | Escalate all signals due to marginal process capability. |
| CQA2 | Evaluation | Evaluation | No action | No action for drift signals due to inherent drift in the process. Escalation not required for outliers or mean shifts due to acceptable process capability. |

Table E: Factors considered in CFT review and potential outcomes

| Factor | Potential outcome |
|---|---|
| Compare signal to historical performance | Escalate if the data is significantly different from historical data or is unusual based on SME knowledge of process performance |
| Proximity of the data point to specifications | Escalate if the CFT concludes there is a risk of OOS |
| Recurrence of similar signals | Escalate to determine the cause |
| Signals for multiple parameters and/or attributes in the same lot | Escalate to determine the cause and any potential process impact not highlighted by CPV trending |
| Related events within the quality system | De-escalate if an attributable cause has been identified and investigated in the quality system |
| Related planned deviation, technical study, or validation protocol | De-escalate if the signal is attributed to the related study, for example, when an existing time limit is exceeded as part of validating an extension of a unit operation’s hold time |

Table F: Signal and response documentation types

| Document type | Description |
|---|---|
| Form | Can be used on a lot-to-lot basis to explain special causes and document their effects on the product and/or process. Form comments can also be summarized in CPV reports. Information may also be captured in a database, typically outside the QMS. |
| Meeting minutes | Used to document periodic reviews of CPV trends, signals, and discussions of the CFT responsible for the reviews. If part of the established periodic CPV review, meeting minutes should be approved by QA and stored in a formal document control system. |
| Technical report | May be used to document an evaluation, as directed by the CFT. A report is typically used to document additional data gathering and/or analysis outside the scope of a periodic CPV report. |
| CPV report | Used to summarize all signals and attributable causes. May include brief discussions for readily explained signals that do not require evaluation in a technical report or a quality record. |
| Quality system record | When the CFT decides to escalate to QMS, a record within the quality system is initiated to track the root cause investigation and product quality impact assessment. This record may involve differing levels as a result of the root cause investigation and the outcome of the product impact assessment. This record should be referenced in the CPV report. |

Sample Responses

The following scenarios, frequently encountered while performing CPV activities, offer guidance on assessing and responding to observed signals under similar circumstances. They are classified into three categories:

- Default response vs. modulated response

- Addressing long-term special cause variations during control chart setup

- Signals indicating improper control chart setup

Default response vs. modulated response

In these three examples the CFT, after routine review, must decide whether to proceed in accordance with the prescribed default response or modulate the response.

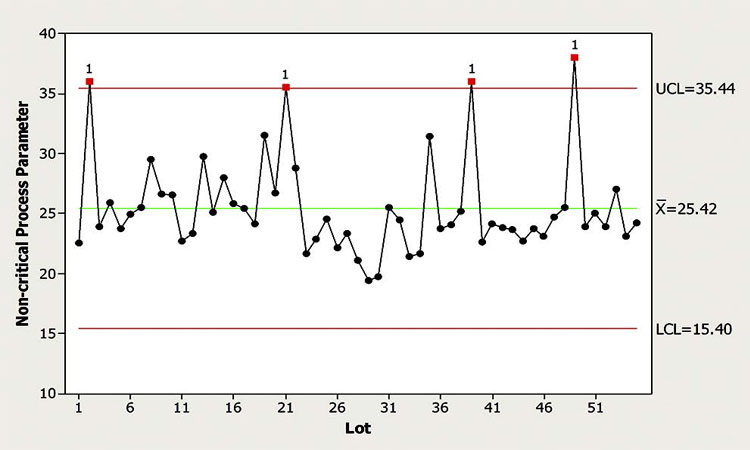

Example 1: Default response

The CFT is monitoring a noncritical process parameter (NCP) using a control chart. By definition, NCPs have no effect on any critical quality attribute over a wide range of operation. They may be step yields, in-process hold durations for stable intermediates, or final cell density in seed steps. Typically, NCPs are monitored as performance or process consistency indicators that could have practical or financial implications. While trend signals of such parameters have no effect on quality, monitoring them offers an opportunity to learn and collate process knowledge. Observed signals for NCPs may indicate suboptimal operation or undesirable process changes.

- 1. U.S. Food and Drug Administration. “Guidance for Industry. Process Validation: General Principles and Practices.” http://www.fda.gov/downloads/Drugs/.../Guidances/UCM070336.pdf

- 2. Boyer, Marcus, Joerg Gampfer, Abdel Zamamiri, and Robin Payne. “A Roadmap for the Implementation of Continued Process Verification.” PDA Journal of Pharmaceutical Science and Technology 70, no. 3 (May-June 2016): 282–292.

- 3. BioPhorum Operations Group. “Continued Process Verification: An Industry Position Paper with Example Protocol.” 2014. http://www.biophorum.com/wp-content/uploads/2016/10/cpv-case-study-print-version.pdf

- 4. Oakland, John. Statistical Process Control, 6th ed. Routledge, 2007.

- 5. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. “Development and Manufacture of Drug Substances (Chemical Entities and Biotechnological/Biological Entities): Q11.” 1 May 2012. http://www.ich.org/fileadmin/Public_Web_Site/ICH_Products/Guidelines/Quality/Q11/Q11_Step_4.pdf

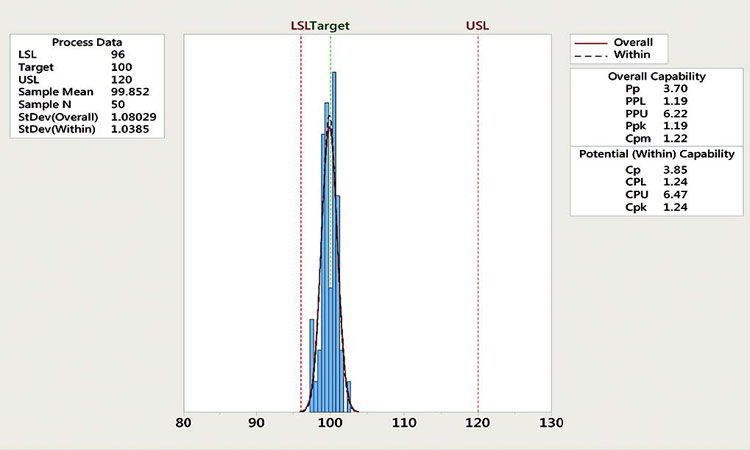

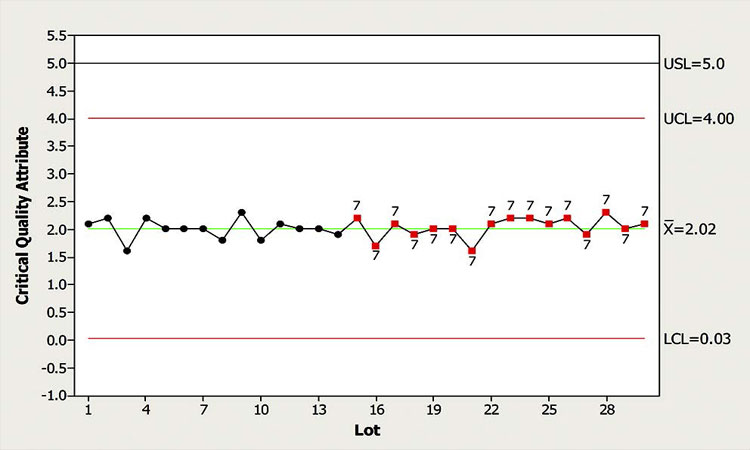

In this scenario, the control chart shown in Figure 1 indicates that the monitored NCP is typically within the control limits, with a few exceptions where outliers are observed. The CFT believes that this NCP is well understood and all previous excursions were explained. According to the CPV plan, the default response for the observed outlier signals is no action.

The CFT must decide whether to escalate the response for the most recent outlier signal. After examining the figure and considering SME input, a member of the CFT argued that the magnitude of the excursion was not alarming and that the frequency of outlier signals did not appear to have increased. Given this conclusion, a reasonable course of action was to follow the default response of “no further action is required.” An additional consideration is that the noted excursion may be part of common cause variation, in which case a reassessment of control limits may be warranted.

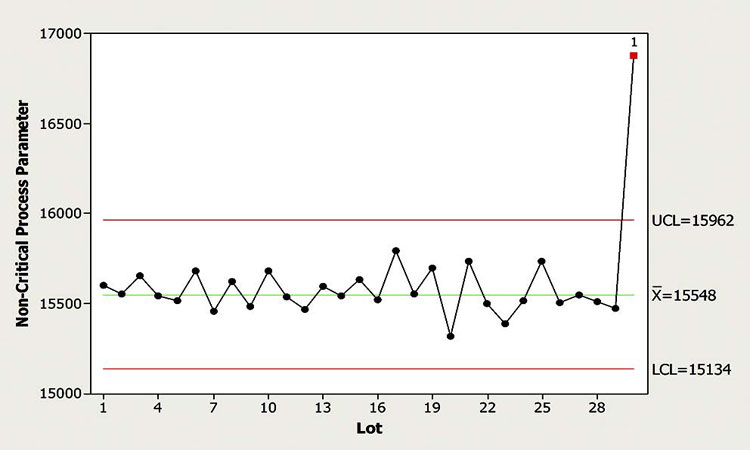

Example 2: Escalated response

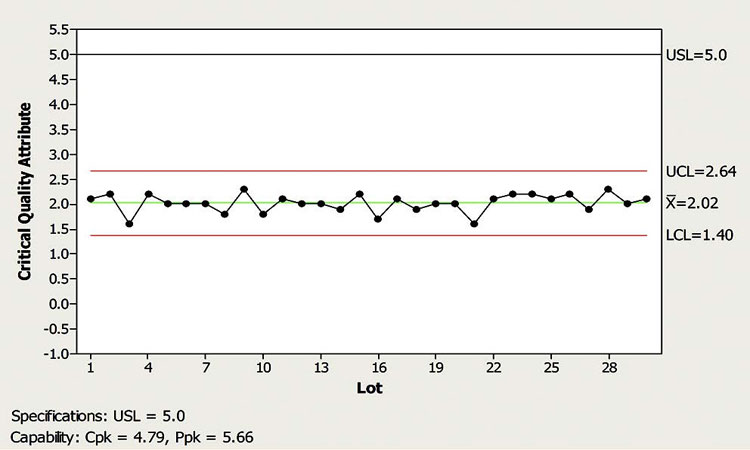

In this scenario (Figure 2), a well-behaved NCP is monitored for outliers, shifts, and drifts. An outlier signal was observed for the latest batch manufactured. Upon review, the CFT concluded that the magnitude of this excursion was of significance, compared to recent manufacturing experience. The CFT was also concerned because outliers were not frequently encountered for this parameter, so there was little process knowledge with respect to the impact of this one, especially considering its magnitude. While the default response for outliers of this particular NCP is no action, the CFT determined that such a significant outlier signal should be escalated to evaluation to determine its cause. Escalation to QMS was not deemed necessary since the associated critical parameters were well within the control strategy.

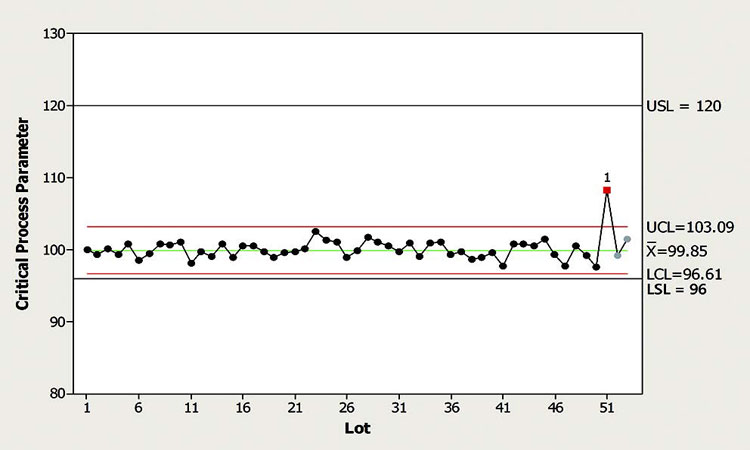

Example 3: Reduced response

In this example, a CPP is being monitored for outliers, shifts, and drifts. The control chart in Figure 3 shows that the CPP experienced an outlier signal for the third-most-recent batch produced. However, the dominant observation from the chart is that this CPP is very well behaved from a statistical perspective.

Figure 4 shows that the process capability for this parameter is marginal (1.00–1.33). Because the direction of the excursion is away from the closest specification limit (the lower specification limit or LSL), however, it does not signify a risk to process capability.

According to the CPV plan, the default response for outliers of this CPP is evaluation. To determine if the default response is appropriate, the CFT considered several factors: the magnitude and frequency of outliers, the direction of the excursion in relation to process capability, and the lack of similar outliers in the next two batches. The CFT determined that this single outlier with relatively small magnitude is well within the specification limits and poses low risk to product quality. Therefore, the team decided that further evaluation is not necessary, reducing the response in this case to no action.
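The capability reasoning in this example can be illustrated numerically. The sketch below assumes the standard Ppk definition (distance from the mean to the nearer specification limit, divided by three overall standard deviations). The function names are hypothetical, and only the marginal band of 1.00 to 1.33 comes from this example; treating values above 1.33 as "acceptable" and values below 1.00 as "below marginal" is an assumption that a company procedure would need to confirm.

```python
import statistics


def ppk(values, lsl, usl):
    """Return (Ppk, nearer specification limit) using the overall standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # needs at least two data points
    ppl = (mean - lsl) / (3 * sd)  # capability against the lower limit
    ppu = (usl - mean) / (3 * sd)  # capability against the upper limit
    return min(ppl, ppu), ("LSL" if ppl <= ppu else "USL")


def capability_class(ppk_value):
    """Classify capability; only the marginal band is taken from the example."""
    if ppk_value > 1.33:
        return "acceptable"       # assumption
    if ppk_value >= 1.00:
        return "marginal"
    return "below marginal"       # assumption


def moves_toward_nearer_limit(point, mean, nearer_limit):
    """True if an excursion lies on the same side of the mean as the nearer limit."""
    return point < mean if nearer_limit == "LSL" else point > mean
```

With these helpers, an outlier above the mean for a parameter whose nearer limit is the LSL returns `False` from `moves_toward_nearer_limit`, which mirrors the CFT's argument that the excursion does not signify a risk to process capability.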

Addressing long-term special cause variations

Many parameters and attributes experience long-term variation due to special causes; these include changes in raw materials over time, aging equipment, campaign-to-campaign variation, and cumulative process, equipment, material, and test method changes. Addressing such long-term variations depends on the nature of the variation, its frequency, and the ability to identify or predict it.

Treatments generally fall into one of two categories. If the special cause can be identified and doesn’t change too frequently, the control chart can be stratified at the different levels of this special cause. Otherwise, a relatively large data set should be used when setting control chart limits. It should be large enough to fully express the voice of the process, including the effect of special causes on long-term variation.

Control chart stratification is desirable when the special causes for the long-term variation are easily identified and have low frequency. This allows for a statistically meaningful number of data points within each stratum. Examples of special causes that can be treated through stratification include (but are not limited to): variations due to campaign manufacturing; variations caused by the introduction of significant changes to the manufacturing process, equipment, or test methods; and variations due to changes in materials (such as chromatography resins) that affect a number of subsequent batches.

If, on the other hand, the materials changes are too frequent or the long-term variation is gradual due to small cumulative changes, stratifying the control chart becomes impractical and challenging. The best approach in the case of too-frequent changes is to build a robust control chart with a relatively large data set that demonstrates these variations. Temporary control limits are initially established and then recalculated at some frequency by introducing additional data until the SMEs feel that the data set expresses the true voice of the process, including long-term variation. The CFT may also consider “no action” for shift signals since these are expected for parameters that are sensitive to long-term variation.

Example 4: Control chart stratification

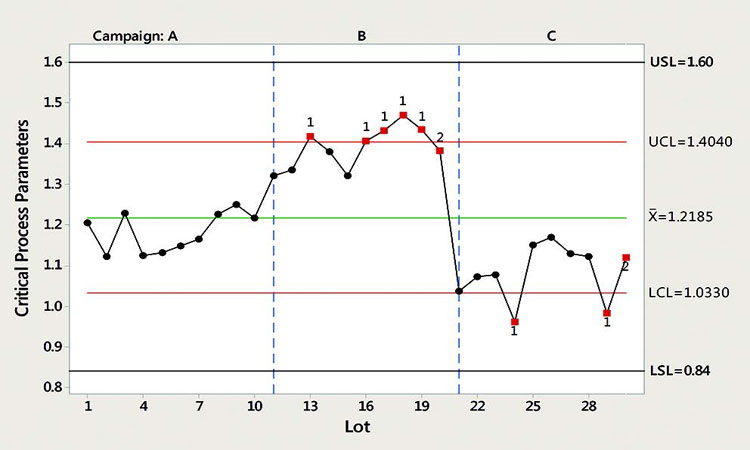

In this example, a CPP for a product that is manufactured in campaigns is monitored for mean shifts, drifts, and outliers. Figure 5 shows a control chart of the CPP over three 10-run campaigns (A, B, and C in the top axis). In this case, it is typical that several months may elapse between successive campaigns. Different products/processes are often run in the interims.

Signals for multiple mean shifts and outliers became evident with continued production. Because the parameter was a CPP, the signal was triaged against a default response of “evaluation.”

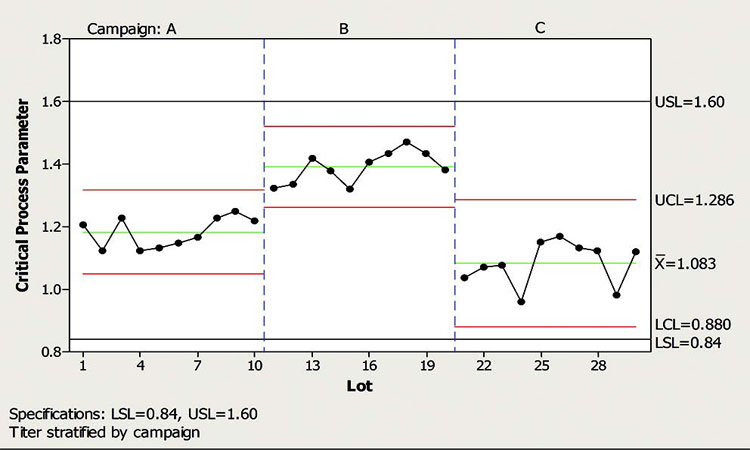

The important consideration is the existence of subtle shifts from one campaign to the next. These can be the result of different raw material batches, new column packs, etc. All are considered normal process variables, but when viewed on a campaign basis they can display marked shifts in the process. To account for the campaign effect on the CPP and avoid false signals, the most appropriate treatment for the control chart was to stratify it per campaign, as shown in Figure 6.

Important considerations:

- All data are within specifications (0.84–1.60). This can be qualified via capability analyses or simply checked relative to specifications.

- Data within each campaign are considered “in-control” or stable. There are no violations of the run rules as described in previous sections.

- Variation within each campaign is similar. Homogeneity of variance can be checked across all campaigns using various statistical tests. The most important consideration is that the process variation is not getting worse (i.e., wider control limits) with each successive campaign.

- Any shifts between campaigns should be acknowledged and documented in CPV plans and/or more formally in a QMS.
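The per-campaign stratification in this example can be sketched as follows. This is an illustration under simplifying assumptions: limits are set at the campaign mean plus or minus three sample standard deviations, the function names are hypothetical, and the ratio of largest to smallest campaign variance is only a crude screen for homogeneity of variance. A formal statistical test would normally be used for that check.

```python
import statistics
from collections import defaultdict


def group_by_campaign(values, campaigns):
    """Collect values into lists keyed by campaign label, preserving order."""
    groups = defaultdict(list)
    for x, c in zip(values, campaigns):
        groups[c].append(x)
    return groups


def stratified_limits(values, campaigns):
    """Return {campaign: (LCL, center, UCL)} computed within each campaign."""
    limits = {}
    for c, xs in group_by_campaign(values, campaigns).items():
        center = statistics.fmean(xs)
        sd = statistics.stdev(xs)  # needs at least two points per campaign
        limits[c] = (center - 3 * sd, center, center + 3 * sd)
    return limits


def variance_ratio(values, campaigns):
    """Largest campaign variance divided by the smallest (1.0 means equal)."""
    variances = [statistics.variance(xs)
                 for xs in group_by_campaign(values, campaigns).values()]
    smallest = min(variances)
    return float("inf") if smallest == 0 else max(variances) / smallest
```

Comparing the control limit widths from `stratified_limits` across successive campaigns gives a quick view of whether process variation is widening over time.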

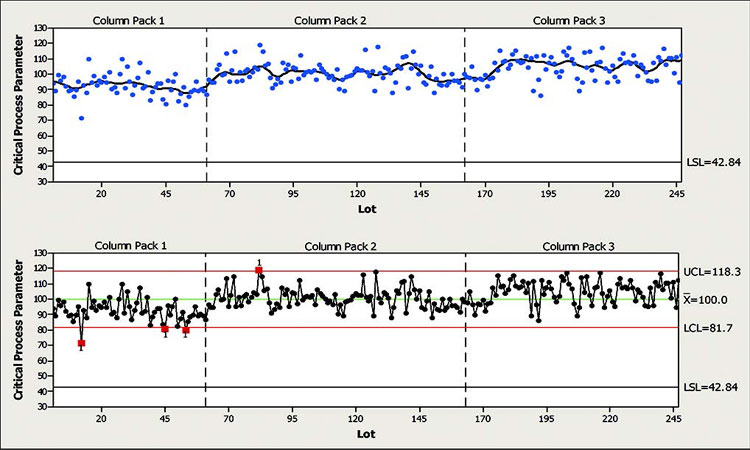

Example 5: Accounting for long-term variation in setting control chart limits

In this scenario, a CPP was found to be sensitive to a number of known and unknown long-term special-cause variations. The top half of Figure 7 shows a scatter plot of the CPP with a line representing a moving average. The figure is also segmented into three parts representing three different column packs. The moving average for this CPP shows slow and somewhat alternating variation within and across the three segments. In addition, the variation within each segment appears to be of the same magnitude as, if not larger than, the variation between segments. In this case, control chart stratification may help avoid some false positive signals, but will not eliminate them.

Given the long-term dynamics of this CPP, setting control chart limits with a limited data set would create excessive false positives. In cases like this, therefore, it’s a good idea to use the largest practical data set when calculating limits that take into account the natural long-term variation of this CPP. The long-term standard deviation should also be used when calculating limits. The lower part of Figure 7 shows a control chart with limits calculated using the entire data set and without stratification.
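The difference between short-term and long-term estimates of variation can be shown with a small sketch. It assumes an individuals chart, where the short-term sigma is commonly estimated from the average moving range divided by the constant 1.128 and the long-term sigma is the sample standard deviation of the whole data set. The function names are hypothetical.

```python
import statistics


def sigma_short_term(values):
    """Estimate sigma from the average moving range of consecutive points."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    return statistics.fmean(moving_ranges) / 1.128  # d2 constant for n = 2


def sigma_long_term(values):
    """Overall sample standard deviation across the entire data set."""
    return statistics.stdev(values)


def individuals_limits(values, sigma):
    """Return (LCL, center, UCL) at the mean plus or minus three sigma."""
    center = statistics.fmean(values)
    return center - 3 * sigma, center, center + 3 * sigma
```

For a parameter that wanders slowly, consecutive points stay close together, so the moving-range estimate is much smaller than the overall standard deviation and limits based on it are correspondingly tighter. That is the mechanism behind the excessive false positives described above.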

Figure 7 indicates that despite the long-term variation observed for this CPP, it is actually a well-behaved parameter. There are few trend signals and the process capability is markedly high, considering the width and location of the control chart with respect to the specifications.
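The effect of using the long-term standard deviation can be sketched with a small comparison. The example below assumes an individuals chart and contrasts limits based on the average moving range (a common short-term estimate of sigma, which the paper does not name) with limits based on the overall sample standard deviation. The data are hypothetical and simply mimic a slow drift up and back down.

```python
from statistics import mean, stdev

def limits_short_term(values):
    """Individuals-chart limits from the average moving range (short-term sigma)."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    sigma = mean(moving_ranges) / 1.128  # d2 constant for a moving range of 2 points
    center = mean(values)
    return center - 3 * sigma, center + 3 * sigma

def limits_long_term(values):
    """Limits from the overall sample standard deviation (long-term sigma)."""
    center, sigma = mean(values), stdev(values)
    return center - 3 * sigma, center + 3 * sigma

def count_outside(values, limits):
    """Number of points falling outside the given (lower, upper) limits."""
    lower, upper = limits
    return sum(1 for v in values if v < lower or v > upper)

# Hypothetical CPP values with slow long-term variation.
values = [10.0, 10.1, 10.2, 10.4, 10.5, 10.7, 10.8, 10.9, 11.0, 11.0,
          10.9, 10.8, 10.6, 10.5, 10.3, 10.2, 10.1, 10.0, 9.9, 10.0]

short = limits_short_term(values)
long_ = limits_long_term(values)
print("short-term limits:", short, "points outside:", count_outside(values, short))
print("long-term limits: ", long_, "points outside:", count_outside(values, long_))
```

With slowly drifting data, point-to-point differences are small, so the short-term limits are narrow and flag many points. The long-term limits absorb the drift as part of the expected variation.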

Signals that indicate improper control chart setup

When calculating control chart limits for trending, the idea is to collate a sufficiently large, representative data set that captures the common cause variation expected to persist in the future. In practice, though, most CPV plans for new products are created with a limited number of batches. Control limits, therefore, may need to be updated once a sufficiently large data set is available.

Even for legacy products, there are cases where the historical data set is not representative of current manufacturing due to cumulative process, equipment, materials, or test-method changes. While it is not advisable to continually and arbitrarily modify the data set baseline and recalculate control limits, applying control limits that do not represent the current manufacturing process is not any better. A balanced approach is preferred, where control limits are assessed periodically and updated when necessary.

Both the mean and variance of monitored parameters and attributes are subject to change; such changes can also be purposefully introduced as the result of process optimization or continuous improvement. Ideally, the mean should move in the direction of a predetermined target and the variance should diminish over time, in accordance with process knowledge gained and the addition of controls fed back through active monitoring.

One of the advantages of control charts is that signals within them can alert practitioners to changes in mean or variance. One valuable control chart run rule not frequently exploited in the industry is 15 data points in a row, all within ±1 standard deviation of the mean, which indicates that the variance has decreased over time. This behavior is often observed as a wide space between the control limits and the data, which cluster around the mean.
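A minimal sketch of this run rule follows. The function name, the data, and the established center line and sigma are hypothetical. The check treats a point exactly at ±1 standard deviation as inside the band, which is an assumption, since implementations differ on the boundary.

```python
def find_hugging_runs(values, center, sigma, run_length=15):
    """Return the indices at which a run of `run_length` or more consecutive
    points within ±1 sigma of the center line is in effect."""
    signals = []
    streak = 0
    for i, v in enumerate(values):
        if abs(v - center) <= sigma:
            streak += 1
        else:
            streak = 0  # any point outside the band restarts the count
        if streak >= run_length:
            signals.append(i)
    return signals

# Hypothetical chart: established center line 50, sigma 2.
wide = [53.5, 46.8, 52.9, 47.2, 53.1]            # early, more variable lots
tight = [50.3, 49.6, 50.8, 49.9, 50.5, 49.4, 50.1, 50.7,
         49.8, 50.2, 49.5, 50.6, 50.0, 49.7, 50.4, 50.9]  # later, tighter lots

signals = find_hugging_runs(wide + tight, center=50.0, sigma=2.0)
print(signals)  # [19, 20]
```

The first signal appears at index 19, the fifteenth consecutive tight point, and repeats for each further point that extends the run.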

Persistent outlier signals in one direction and/or persistent shift signals can also indicate long-term shifts in the mean. In this case, the parameter in question should be examined to determine if the change is acceptable and new control limits are needed, or if the change is not acceptable and further action is needed to bring the mean back to target. The following two examples illustrate these types of situations.
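A persistent shift signal can be sketched as a run of consecutive points on one side of the center line. The run length of 8 used below is an assumption for illustration (common rule sets use 8 or 9); the applicable value is whatever the CPV plan defines. The function name and data are hypothetical.

```python
def find_shift_runs(values, center, run_length=8):
    """Return (index, side) pairs where `run_length` or more consecutive
    points fall on the same side of the center line."""
    signals = []
    streak = 0
    last_side = 0
    for i, v in enumerate(values):
        side = (v > center) - (v < center)  # +1 above, -1 below, 0 on the line
        if side != 0 and side == last_side:
            streak += 1
        else:
            # side changed, or the point sits exactly on the center line
            streak = 1 if side != 0 else 0
        last_side = side
        if streak >= run_length:
            signals.append((i, "above" if side > 0 else "below"))
    return signals

# Hypothetical data: center line 100, with an upward shift after the fifth point.
values = [101.0, 99.0, 100.5, 99.5, 98.8,
          100.6, 101.2, 100.9, 101.5, 100.8, 101.1, 100.7, 101.3, 100.4]

shift_signals = find_shift_runs(values, center=100.0)
print(shift_signals)  # [(12, 'above'), (13, 'above')]
```

Detecting the run is only the first step. Whether the new mean is acceptable, and whether limits should be recalculated, remains a decision for the review team as described above.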

Example 6: Variance reduction over time

In this example, while monitoring a CPP, the CFT observed a wide space between the control limits and the data, which was clustered around the mean as shown in Figure 8. The signals highlighted on the chart indicate that 15 or more data points are within ±1 standard deviation of the mean.

Such a scenario can result from one of two things: 1) a phenomenon called “stratification,” wherein the samples are systematically pulled from multiple distributions, or 2) the process variation has narrowed, indicating a significant process shift. Both require that control limits be re-evaluated.

Upon further assessment, the CFT ruled out stratification. Reviewing the history of this chart indicated that the control limits were established using a limited data set when the process was first transferred to the site. Documented evidence showed that a number of process improvements and tighter controls were implemented over time. The CFT concluded, therefore, that the current limits were inappropriate and new limits were needed.

Figure 9 displays the same data set, using control limits that reflect the true nature of the data. In this case, the control limits were recalculated to reflect the process improvement, which resulted in narrower limits.

Example 7: Mean recentering and variance reduction over time

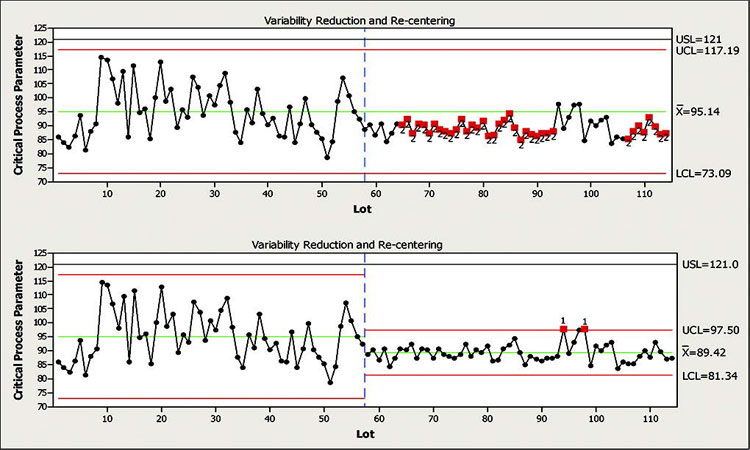

In this example, a process had undergone an improvement project to optimize performance and reduce variation. While implementing the changes, the project team decided to maintain existing limits and monitor performance for 15 to 20 lots to see if the change was successful.

Figure 10 shows data from this process where the change was implemented around lot 58. At around lot 70, the CFT reviewed the data and assessed the change as successful. At lot 110, after a period of time that encompassed additional variance factors, such as equipment maintenance and critical raw materials, the control limits were recalculated and are now considered appropriate for future production.
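The recalculation step in this example can be sketched as follows. The lot numbers follow the narrative above (both are approximate in the source), while the simulated values, means, and standard deviations are hypothetical and chosen only to show a recentered mean with reduced variance. Limits are computed here as the mean ± 3 overall standard deviations, which is an assumption about the chart type.

```python
import random
from statistics import mean, stdev

def control_limits(values):
    """Return (lower, center, upper) as mean ± 3 sample standard deviations."""
    center, sigma = mean(values), stdev(values)
    return center - 3 * sigma, center, center + 3 * sigma

rng = random.Random(1)   # fixed seed so the sketch is repeatable
change_lot = 58          # lot at which the change was implemented
recalc_lot = 110         # lot at which limits were recalculated

# Hypothetical process: mean 100, SD 2 before the change; mean 103, SD 0.5 after.
lots = ([rng.gauss(100.0, 2.0) for _ in range(change_lot - 1)] +
        [rng.gauss(103.0, 0.5) for _ in range(recalc_lot - change_lot + 1)])

# Lot n is stored at index n - 1.
old = control_limits(lots[:change_lot - 1])            # lots 1 to 57
new = control_limits(lots[change_lot - 1:recalc_lot])  # lots 58 to 110

print("old limits:", [round(x, 2) for x in old])
print("new limits:", [round(x, 2) for x in new])
```

Only post-change lots feed the new limits, so the recalculated chart reflects the improved process rather than a blend of the two periods.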

Summary

CPV is an important initiative for the biopharmaceutical industry. Compliance means that statistical signals revealed from CQAs and CPPs should be addressed appropriately. CPV helps maintain product quality, but it is distinct from batch release. Since CPV’s primary purpose is to protect the product from longer-term sources of variation, escalation to the QMS is likely to be rare.

Good practice related to CPV signals involves defining the attributes and parameters to be monitored, along with their associated signal criteria. A set of default responses can be defined, but it is important that signals be reviewed by a CFT familiar with the product and the process. This allows the complexities of the manufacturing process to be considered. Signals may be escalated or de-escalated from their defaults; the rationale for these decisions must be recorded. The review process also provides opportunity for an organization to understand its manufacturing process in greater depth and improve it over time.

Acknowledgments

Andrew Lenz (Biogen), Martha Rogers (AbbVie), Bert Frohlich (Shire), Parag Shah (Genentech/Roche), Ranjit Deshmukh (AstraZeneca), Sinem Oruklu (Amgen), Susan Ricks-Byerley (Merck), and Randall Kilty (Pfizer).